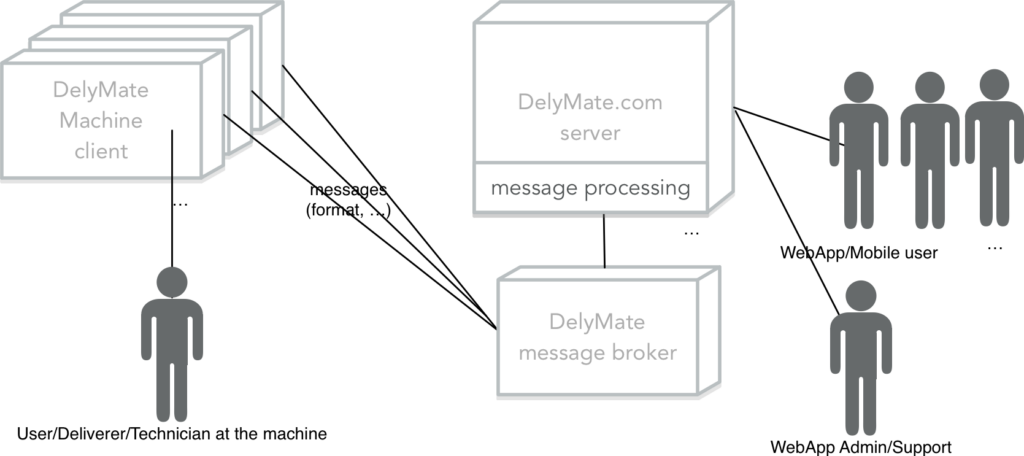

The Viaboxx DelyMate product consists of separate subsystems that need a communication infrastructure to exchange messages.

The DelyMate client is a Java/Groovy program that runs on the delivery machine. It opens the lockers and handles the interaction with the user at the machine, e.g. by processing input from the touch screen.

The DelyMate server is a Java/Groovy server that serves the users of the interactive web application and exchanges messages with the machines.

Viaboxx has experience with this kind of architecture. We chose a message-driven approach in which the client machines and the server communicate through an offline-capable, bidirectional message broker architecture such as JMS. There are a few decisions to be made:

1. the kind of message broker (protocol, provider) to use

2. the format for message content (XML, JSON, binary) to be sent or received

3. how to specify the communication API to make it type-safe and easy to use

In short, I want to present some alternatives for each of these three points and show the decisions made for DelyMate together with their pros and cons. At the end of this article I will give some implementation snippets written in Groovy.

We wanted to keep the technical synergies between the client and server software high, both to reduce development costs and to reduce the effort of onboarding new developers. We use the Spring Framework with Spring Boot in both client and server, because our company knows it well and because many stable libraries are available to integrate communication protocols, security, persistence and other services that our software must provide.

Overview: Messaging infrastructure and users of DelyMate

- The Message Broker

In similar projects, Viaboxx used to select a JMS solution with a provider like ActiveMQ, SonicMQ, or IBM MQ. For DelyMate we wanted to try something faster and lighter, so we decided to use RabbitMQ. It is not a JMS-compliant message broker, but it is known for its high performance, reliability, and ease of configuration.

RabbitMQ is an open-source messaging solution written in Erlang. The RabbitMQ broker runs as a separate process, in our case as a separate Docker container (a preconfigured Docker image for RabbitMQ is available at https://hub.docker.com/r/library/rabbitmq/).

Between each machine and the server, a separate message queue is used to send messages in one direction and another message queue to receive messages. Because each machine has a unique identifier, e.g. M1, the queues are named:

- M1-to-server: carries messages from machine M1 to the server; the server registers a message listener on that queue

- server-to-M1: carries messages from the server to machine M1; the machine registers a message listener on that queue

Whenever a new machine is configured in the server, a new identifier is given and a new pair of queues is created in RabbitMQ without additional configuration effort.
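The naming convention can be captured in a tiny helper. The following is only a sketch in plain Java; the class `MachineQueues` and its method names are hypothetical and not taken from the DelyMate code base. It only derives the two queue names from a machine identifier and does not talk to RabbitMQ.

```java
// Hypothetical helper: derives the two queue names for one machine.
final class MachineQueues {
    private final String machineId;

    MachineQueues(String machineId) {
        if (machineId == null || machineId.isEmpty()) {
            throw new IllegalArgumentException("machineId must not be empty");
        }
        this.machineId = machineId;
    }

    /** Queue the machine writes to and the server listens on. */
    String toServer() {
        return machineId + "-to-server";
    }

    /** Queue the server writes to and the machine listens on. */
    String fromServer() {
        return "server-to-" + machineId;
    }
}
```

Keeping the convention in one place means that client and server cannot drift apart on how a queue is called.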

This simple communication architecture makes it easy to administer pending messages and to monitor the message throughput between a machine and the server in case of trouble.

- The Message Format

In other projects the preferred format for message bodies was XML. Alternatively, you could choose a binary protocol (e.g. with Google’s Protocol Buffers library) if you need to make the message payload as small as possible. While XML messages are quite verbose, the benefits of XML are its readability and the well-established tooling for parsing and for integration with Java libraries.

For DelyMate, we decided to use JSON instead of XML for the message payload. JSON is also human-readable and slightly more compact than XML. It is easy to generate and parse, and the library support in Java is excellent.

- Specification of communication-API

The kinds of messages vary with the business logic that client and server must support. The client must subscribe to some data, such as user logins, roles and permissions, and some master data of the machines (e.g. the number of lockers and their layout).

The client sends messages to the server whenever a delivery has been placed in a locker or a locker has been emptied by use of a PIN code. The server must publish new PIN codes, new users, etc., to the machines that need access to the data.

The idea is that a machine can be offline and still support all use cases, because the required data is already there. It need not be queried from the server by request-response while a user waits in front of the machine for the data to arrive.

In other projects the typical solution was to create object types (classes) for each kind of message between clients and server and to write a specification, e.g. an XML Schema, from which to generate classes that assist in parsing and generating the message format.

The benefit of such an XML Schema solution is that a documented API exists. The disadvantage is that each new message requires an extension of the schema. Besides the effort to develop a specification for the messages, in practice you generate a separate package of classes for those messages, and you need a layer to build/map the messages for calls between the communication partners. This is also code that has to be written, tested, and maintained.

For DelyMate we wanted to find a solution that makes communication between the client and the server as easy as possible. We did not need a separate API-specification for the communication partners, because we use the same libs and team to develop both sides, and we can make sure that we deploy a new compatible version of clients and servers in equal release intervals. A couple of Java-interfaces should be the communication-API.

We could even make another simplification: both the clients and the server use a database and share the same database schema. So the domain model and the database tables are equal between the communication partners. The only difference is that the clients should not store all data, but only the data relevant for them, while the server should store more and act as a central integration platform.

Those simplifications helped us to create a generic communication API that follows these principles:

- Same domain model/database schema between the communication partners.

- The messages are derived from Java service interfaces. The message type is the service name and method name; the message body is the array of method parameters. Machine identifier, service, method and parameters are formatted as a JSON message and implemented as properties of a plain class “RemoteMessage”.

- The creation and publishing/distribution of messages happens transparently with the help of the Spring Framework. Some implementation hints follow.

- The JSON is sent with RabbitMQ.
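To make the message layout more concrete, here is a minimal sketch in plain Java of what such a message class and its JSON form might look like. The class name `RemoteMessageSketch`, the JSON property names (`machine`, `service`, `method`, `args`) and the hand-written encoder are assumptions made for illustration. The real RemoteMessage class is not shown in this article, and a real project would normally leave the serialisation to a JSON library.

```java
import java.util.Arrays;
import java.util.List;

// Illustrative only: property names and JSON layout are assumptions.
final class RemoteMessageSketch {
    final String machine;    // machine identifier, e.g. "M1"
    final String service;    // name of the service (bean) to call
    final String method;     // name of the method to call
    final List<Object> args; // method parameters in call order

    RemoteMessageSketch(String machine, String service, String method, Object... args) {
        this.machine = machine;
        this.service = service;
        this.method = method;
        this.args = Arrays.asList(args);
    }

    /** Renders the message as a single JSON object. */
    String toJson() {
        StringBuilder sb = new StringBuilder("{");
        sb.append("\"machine\":").append(encode(machine)).append(',');
        sb.append("\"service\":").append(encode(service)).append(',');
        sb.append("\"method\":").append(encode(method)).append(',');
        sb.append("\"args\":[");
        for (int i = 0; i < args.size(); i++) {
            if (i > 0) sb.append(',');
            sb.append(encode(args.get(i)));
        }
        return sb.append("]}").toString();
    }

    // Integers, longs and booleans are written bare, everything else as a string.
    private static String encode(Object value) {
        if (value == null) return "null";
        if (value instanceof Integer || value instanceof Long || value instanceof Boolean) {
            return value.toString();
        }
        return quote(value.toString());
    }

    // Escapes the characters that JSON does not allow unescaped inside strings.
    private static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
                    else sb.append(c);
            }
        }
        return sb.append('"').toString();
    }
}
```

In this sketch a call such as `new RemoteMessageSketch("M1", "tanModifier", "updateInsertTanUsed", 42L, "2019-05-01T10:15:30Z").toJson()` yields one flat JSON object whose `args` array mirrors the method parameters. The numeric key and the timestamp string are stand-ins for the `Key` and `ZonedDateTime` types used later in the article.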

Message Publishing and Processing

To explain the way a message is created and sent we pick a message as part of a delivery process. The service interface has a method to mark an InsertTAN (= a PIN-code) as “used”. This must be saved in the client and in the server.

The service interface (which in reality contains additional methods) could be:

    interface TanModifier {
        @Publish
        InsertTan updateInsertTanUsed(Key id, ZonedDateTime usedTime)
    }

The parameters are the primary key of the InsertTAN (“id”) and the timestamp created on the client-side (“usedTime”) that must be stored in the database to mark the TAN as used.

The service that implements the TanModifier interface is:

    @Component
    @Modifier
    class TanModifierImpl implements TanModifier {
        InsertTan updateInsertTanUsed(Key id, ZonedDateTime usedTime) {
            // load InsertTan by id from the database
            // update the usedTime and store the change
            // signal an event that insertTan has been used
        }
    }

We implemented two custom annotations:

@Modifier marks a service that modifies data that we must synchronise between the client and server.

@Publish configures the synchronisation, because not every machine needs every change: data that is of no interest to a specific machine does not have to be sent to it. We only want to present the basic idea of the communication API in this article, so we will not go into details about the @Publish annotation.
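As a rough illustration, two such marker annotations could be declared in plain Java as shown here. This is a sketch under assumptions: the real @Publish carries configuration that is not described in this article, so it appears as an empty marker, and `DemoModifier`, `DemoModifierImpl` and `AnnotationCheck` are hypothetical names. The one detail that matters is the runtime retention, without which a check like `isAnnotationPresent` cannot see the annotation.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;

// Marks a service class whose calls must be synchronised (assumed shape).
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface Modifier {}

// Marks an interface method that takes part in publishing (assumed shape,
// the real annotation has configuration attributes that are not shown here).
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Publish {}

// Hypothetical service interface and implementation for the demonstration.
interface DemoModifier {
    @Publish
    String rename(long id, String newName);
}

@Modifier
class DemoModifierImpl implements DemoModifier {
    @Override
    public String rename(long id, String newName) {
        return id + ":" + newName;
    }
}

final class AnnotationCheck {
    /** True if the bean's class carries @Modifier. */
    static boolean isModifier(Object bean) {
        return bean.getClass().isAnnotationPresent(Modifier.class);
    }

    /** True if the interface declares a @Publish method with the given name. */
    static boolean isPublished(Class<?> serviceInterface, String methodName) {
        for (Method m : serviceInterface.getMethods()) {
            if (m.getName().equals(methodName) && m.isAnnotationPresent(Publish.class)) {
                return true;
            }
        }
        return false;
    }
}
```

Note that @Modifier sits on the implementation class while @Publish sits on the interface method, which matches the TanModifier example above.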

The TanModifier interface and the TanModifierImpl implementation exist on client and server. This means that the business logic to modify domain entities is developed only once and run equally on all communication partners. Thus, the API is type-safe and always up-to-date between clients and the server. There is no need to specify the messages and how they are created, no mapping between calls and messages, and no separate classes for message types.

How does this work?

Spring BeanPostProcessors

Client and server use the Spring Framework to autowire components/services and to inject dependencies between them. On both the client side and the server side there is a Spring component that implements Spring’s BeanPostProcessor interface. (Details can be found in the Spring docs: https://docs.spring.io/spring/docs/current/spring-framework-reference/core.html#beans)

On the client side, the implementation decorates every Spring component that is annotated with @Modifier with a dynamic proxy.

The proxy first delegates the call to the local implementation, then creates a RemoteMessage from the service name, method name and method parameters and sends it as JSON via RabbitMQ to the server. This can be done in a few lines of code (the snippet is in Groovy, but the Java version is analogous):

    class ModifierPostProcessor implements BeanPostProcessor, ModPublisher {
        @Autowired
        ApplicationEventPublisher eventPublisher

        @Autowired
        RabbitTemplate rabbitMQ

        // wrap every bean whose class is annotated with @Modifier
        Object postProcessAfterInitialization(Object bean, String beanName) {
            if (bean.class?.isAnnotationPresent(Modifier)) {
                return createProxy(bean.class.interfaces, beanName, bean)
            } else {
                return bean
            }
        }

        private Object createProxy(final Class[] interfaces, final String beanName, final bean) {
            final machineIdentifier = // ... value from configuration
            return Proxy.newProxyInstance(getClass().classLoader, interfaces,
                new InvocationHandler() {
                    Object invoke(Object proxy, Method method, Object[] args) {
                        Object localResult
                        try {
                            // run the local implementation first
                            localResult = method.invoke(bean, args)
                        } catch (InvocationTargetException ex) {
                            // rethrow the original exception of the service
                            throw ex.targetException
                        }
                        // do not re-publish a call that was triggered by a received message
                        if (!RemoteMessage.current) {
                            eventPublisher.publishEvent(new RemoteMessage(
                                machineIdentifier, beanName, method.name, args))
                        }
                        return localResult
                    }
                })
        }

        // send the message to the broker when the event is handled
        @TransactionalEventListener(fallbackExecution = true)
        void publish(RemoteMessage message) {
            rabbitMQ.convertAndSend(config.serverQueue, message)
        }
    }
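For readers who prefer Java, the core of the proxy idea can be reduced to the following self-contained sketch. It is not the DelyMate implementation: there is no Spring and no RabbitMQ involved, the "outbox" list merely stands in for the broker, and `CounterService`, `LocalCounterService` and `PublishingProxyFactory` are hypothetical names. It only shows the order of the steps: call the local implementation, then record the call for remote delivery.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical service used only for this demonstration.
interface CounterService {
    int add(int amount);
}

class LocalCounterService implements CounterService {
    private int total;

    @Override
    public int add(int amount) {
        total += amount;
        return total;
    }
}

final class PublishingProxyFactory {
    /** Stand-in for the broker: collects entries like "service.method[args]". */
    final List<String> outbox = new ArrayList<>();

    <T> T wrap(final String serviceName, final Class<T> api, final T target) {
        InvocationHandler handler = new InvocationHandler() {
            @Override
            public Object invoke(Object proxy, Method method, Object[] args) throws Throwable {
                Object localResult;
                try {
                    // 1. run the local implementation first
                    localResult = method.invoke(target, args);
                } catch (InvocationTargetException ex) {
                    // unwrap so that callers see the original exception
                    throw ex.getCause();
                }
                // 2. after local success, record the call for remote delivery
                //    (toString, hashCode and equals are not service calls)
                if (method.getDeclaringClass() != Object.class) {
                    outbox.add(serviceName + "." + method.getName()
                            + Arrays.toString(args == null ? new Object[0] : args));
                }
                return localResult;
            }
        };
        Object proxy = Proxy.newProxyInstance(api.getClassLoader(), new Class<?>[] {api}, handler);
        return api.cast(proxy);
    }
}
```

A caller obtains the proxy with `factory.wrap("counter", CounterService.class, new LocalCounterService())` and then uses it like the plain service. If the local call throws, nothing is recorded, so only successful local changes would be sent on.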

On the server side there is a similar BeanPostProcessor that delegates calls on Modifiers in the server to the machine(s) interested in the data. It is slightly more complicated because it also evaluates the @Publish annotation to route calls to the relevant machines only.

Client and server use the same message consumer. It receives the RemoteMessages created in the code above and forwards each call to the service implementation:

    @Service
    class MessageConsumer implements ChannelAwareMessageListener, BeanFactoryAware {
        BeanFactory beanFactory

        @Transactional
        void onMessage(Message message, Channel channel) {
            RemoteMessage rm
            try {
                rm = JsonMapper.instance.unmarshal(message.body, RemoteMessage)
            } catch (Exception ex) {
                // unreadable message: acknowledge it so that it is not redelivered
                channel.basicAck(message.messageProperties.deliveryTag, false)
                return
            }
            if (rm) {
                // mark the message as "current" so that the proxy does not publish it again
                RemoteMessage.setCurrent(rm)
                try {
                    Object service
                    try {
                        service = beanFactory.getBean(rm.service)
                    } catch (Exception ex) {
                        log.error("Cannot find $rm.service", ex)
                    }
                    if (service) {
                        try {
                            // Groovy-way to call method with reflection
                            if (rm.args != null) {
                                service."$rm.method"(*rm.args)
                            } else {
                                service."$rm.method"()
                            }
                        } catch (Exception ex) {
                            log.error("Error in service $rm", ex)
                        }
                    }
                } finally {
                    RemoteMessage.clearCurrent()
                    channel.basicAck(message.messageProperties.deliveryTag, false)
                }
            }
        }
    }

The code receives the RemoteMessage, looks up the local service, and invokes the method with (or without) the arguments.
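Groovy hides the reflection behind its dynamic method call syntax. In Java the same lookup-and-invoke step might look like the following sketch. It is a simplification under stated assumptions: services live in a plain map instead of a Spring BeanFactory, methods are matched by name and parameter count only, and no conversion of JSON values to parameter types takes place. `ReflectiveDispatcher` and `GreetingService` are hypothetical names.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.util.HashMap;
import java.util.Map;

final class ReflectiveDispatcher {
    // Stand-in for the bean factory: services registered by name.
    private final Map<String, Object> services = new HashMap<>();

    void register(String name, Object service) {
        services.put(name, service);
    }

    /** Looks up the service by name and calls the named method with the arguments. */
    Object dispatch(String serviceName, String methodName, Object... args) {
        Object service = services.get(serviceName);
        if (service == null) {
            throw new IllegalArgumentException("Unknown service " + serviceName);
        }
        Object[] actual = args == null ? new Object[0] : args;
        for (Method m : service.getClass().getMethods()) {
            // simplification: match on name and parameter count only
            if (m.getName().equals(methodName) && m.getParameterCount() == actual.length) {
                try {
                    return m.invoke(service, actual);
                } catch (InvocationTargetException ex) {
                    // surface the exception thrown by the service itself
                    Throwable cause = ex.getCause();
                    if (cause instanceof RuntimeException) throw (RuntimeException) cause;
                    throw new IllegalStateException(cause);
                } catch (IllegalAccessException ex) {
                    throw new IllegalStateException(ex);
                }
            }
        }
        throw new IllegalArgumentException("No method " + serviceName + "." + methodName
                + " with " + actual.length + " parameter(s)");
    }
}

// Hypothetical service used only for this demonstration.
class GreetingService {
    public String greet(String name, int times) {
        return "Hello " + name + " #" + times;
    }

    public String ping() {
        return "pong";
    }
}
```

With a `GreetingService` registered as "greetingService", `dispatch("greetingService", "greet", "M1", 2)` calls `greet("M1", 2)`, and `dispatch("greetingService", "ping")` covers the case without arguments. Overloaded methods with the same parameter count would need a stricter match than this sketch provides.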

Basically, this is all we need to do so that remote method calls synchronise the data between the clients and the server. For the application developer, calling such a service method looks the same regardless of whether it is a local or a remote call. The services annotated with @Modifier are used within the other services of the client, and the proxy that the BeanPostProcessor wraps around them handles the communication with remote partners transparently.

Example for the TanService in the client that uses the TanModifier:

    @Service
    @Transactional
    class TanServiceImpl implements TanService {
        @Autowired
        TanModifier tanModifier

        @Override
        void deliver(Key insertTanId, int lockerNumber) {
            Locker locker = boxService.findBoxByNumber(lockerNumber)
            if (!locker) throw new IllegalArgumentException(
                "Cannot find Locker $lockerNumber")
            final ZonedDateTime now = ZonedDateTime.now()
            // call through the proxy: updates locally and publishes to the server
            InsertTan insertTan =
                tanModifier.updateInsertTanUsed(insertTanId, now)
            // business logic continues...
        }
    }

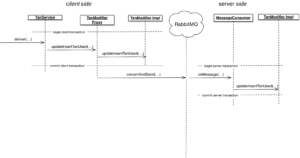

The sequence diagram above illustrates the components involved when the deliver method is called on the client-side TanService.

We hope that this technical article gave some hints about how the DelyMate messaging communication is implemented with the Spring Framework.